MSA Attribute Data Study

An MSA attribute data study lets you recognize and understand errors in collecting attribute data. Process variation affects how the resulting products and services appear to customers. However, what you (and ultimately the customer) observe usually includes not only the variability of the item itself but also some variation introduced by measuring it.

A simple example is to pick up a familiar object, such as this page. Let's say you need to judge whether the lettering on this page is crisp. You then send the same page to three other people. Most likely, the answers would differ among everyone. Later, someone sends you the same page (without telling you it is the same page) and asks you to judge it again. Again, you will most likely reach a different answer or conclusion. The page itself has not changed; your measurement system caused the difference in the answers.


MSA stands for Measurement System Analysis. An MSA attribute data study measures the errors within your measurement system. The higher the measurement error, the harder it is to understand the true process capability and behavior.

It is crucial to analyze your measurement system with an MSA attribute data study before embarking on any process improvement activities.

The sole purpose of a measurement system in Lean Six Sigma is to collect the right data to answer the questions being asked. To do this, the team must be confident in the integrity of the data being collected. To confirm data integrity, the team must know:

- The type of data

- If the available data is usable

- If the data is suitable for the project

- If it is not suitable, whether it can be made usable

- How the data can be audited

- If the data is trustworthy

To answer these questions, Data Integrity is broken down into two elements:

1) Validity. Is the "right" aspect of the process or product being measured? The data might be from a reliable method or source, but still not match the operational definitions established for the project.

2) Reliability. Is the valid measurement system producing good data? This considers the accuracy and consistency of the data.

Validity is covered in MSA Validity. Reliability depends on the data type. This page covers MSA for attribute data; variable measurement systems are covered separately.


An MSA attribute data study is the primary tool for assessing the reliability of a qualitative measurement system. Attribute data has less information content than variables data, but it is often all that is available, and it is still important to be diligent about the integrity of the measurement system. Attribute inspection generally does one of three things:

- Classifies an item as either Conforming or Nonconforming

- Classifies an item into one of multiple categories

- Counts the number of "non-conformities" per item inspected

Thus, a "perfect" MSA attribute data system would:

- Correctly classify every item

- Always produce a correct count of an item's non-conformities

Some attribute inspections require little judgment because the correct answer is obvious. For example, in destructive testing, the item either broke or remained intact. In the majority of cases, however, attribute inspection is highly subjective. For such a measurement system, if several appraisers are evaluating the same thing, they need to agree:

- With each other

- With themselves

- With an expert's opinion

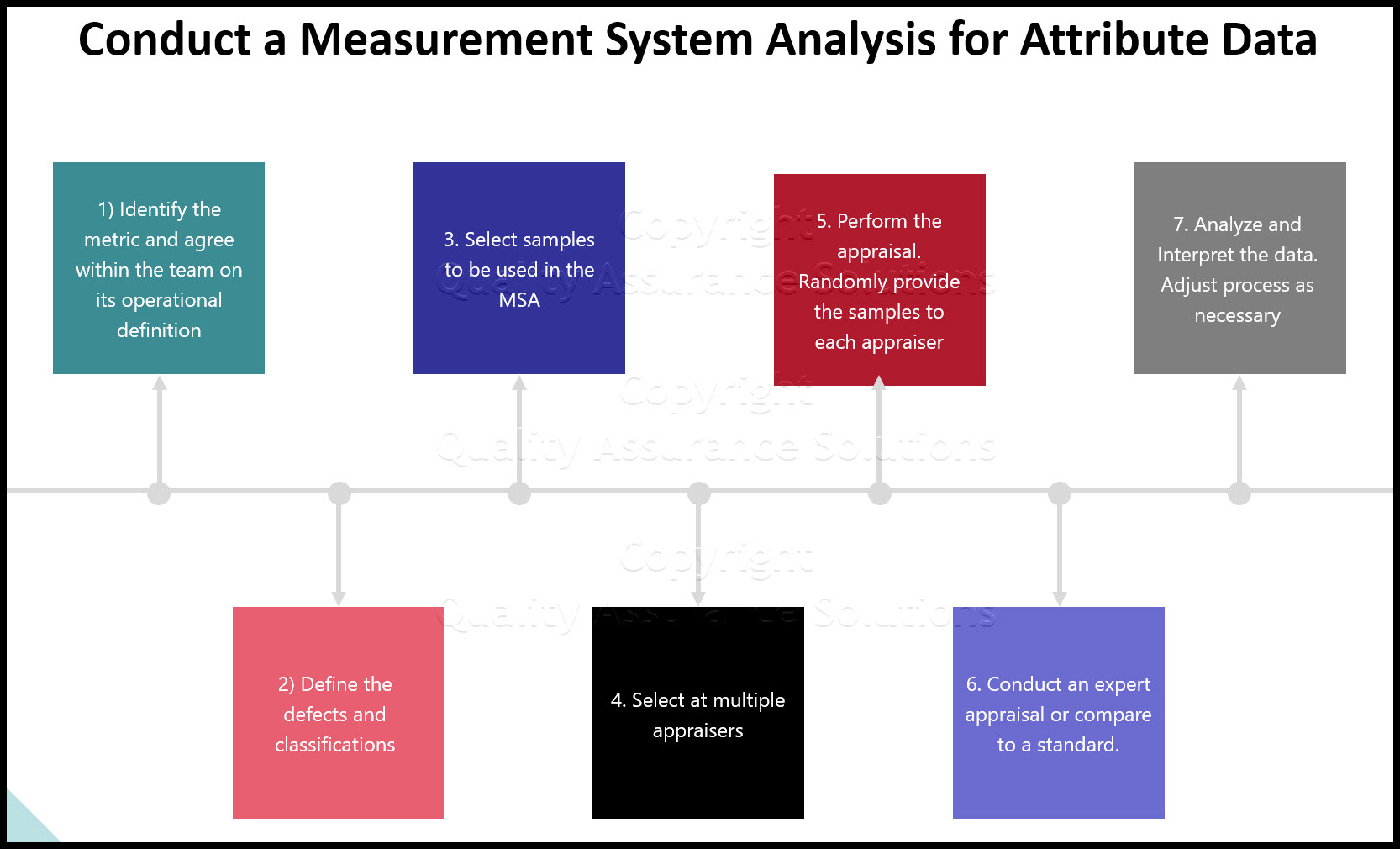

Roadmap

The roadmap for planning, implementing, and collecting data for an MSA attribute data study is as follows:

Step 1. To start the MSA attribute data study, identify the metric and agree within the team on its operational definition (KPOVs and Data). Often, exactly what and where to measure is not immediately obvious.

For example, in many transactional service processes, it could be the initial writing of the line items to an order, the charging of the order to a specific account, or the translation of the charges into a bill. Each of these might involve a separate classification step.

Step 2. Define the defects and the classifications that make an item defective. These should be mutually exclusive (a defect cannot fall into two categories) and exhaustive (if an item is defective, it must fall into at least one defined category).

If this is done correctly, every entity falls into one and only one category.
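One way to test a draft set of Step 2 definitions before the study is to write each category as a rule and count how many categories every trial item matches: zero matches means the definitions are not exhaustive, and more than one means they overlap. The sketch below assumes the definitions can be expressed as rules on a measured feature; the scratch-width categories and thresholds are hypothetical.

```python
from typing import Callable, Dict, List

# Hypothetical representation: an item is a dict of observed features, and each
# category is a rule that returns True when the item belongs to that category.
Item = Dict[str, float]
Rule = Callable[[Item], bool]


def matching_categories(item: Item, categories: Dict[str, Rule]) -> List[str]:
    """Return the names of every category whose rule accepts the item."""
    return [name for name, rule in categories.items() if rule(item)]


def check_definitions(items: List[Item], categories: Dict[str, Rule]) -> List[str]:
    """Report items that match no category (not exhaustive) or several (not mutually exclusive)."""
    problems = []
    for index, item in enumerate(items):
        matches = matching_categories(item, categories)
        if len(matches) == 0:
            problems.append(f"item {index}: no category (definitions not exhaustive)")
        elif len(matches) > 1:
            problems.append(f"item {index}: {matches} (categories overlap)")
    return problems


# Hypothetical draft definitions (scratch width in mm) containing an overlap and a gap.
categories = {
    "acceptable": lambda it: it["scratch_mm"] < 0.5,
    "minor": lambda it: 0.4 <= it["scratch_mm"] < 1.0,
    "major": lambda it: it["scratch_mm"] > 1.0,
}
items = [{"scratch_mm": 0.2}, {"scratch_mm": 0.45}, {"scratch_mm": 1.0}]
for line in check_definitions(items, categories):
    print(line)
```

Here the 0.45 mm item falls into two categories and the 1.0 mm item into none, so both boundaries would need to be redefined before the study.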


Step 3. Select the samples to be used in the MSA. Use a sample size calculator; typically 30 to 50 samples are needed. The samples should span the normal extremes of the process with regard to the attribute being measured.

Measure the samples independently of one another. The majority of the samples should come from the "gray" areas, with a few from the clearly good and clearly bad.

For example, for a sample of 30 units, five units might be clearly defective and five units might be clearly acceptable. The remaining samples would vary in quantity and type of defects.
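The Step 3 guidance can be turned into a quick check on a planned sample set. This is a minimal sketch that assumes the team has pre-labelled each candidate sample as "clear_good", "clear_bad", or "gray" (hypothetical labels); it only flags departures from the 30 to 50 sample range, a gray-area majority, and the presence of a few clear cases.

```python
from collections import Counter
from typing import List


def review_sample_plan(labels: List[str]) -> List[str]:
    """Flag departures from the Step 3 guidance for a planned MSA sample set.

    labels holds one pre-classification per sample: "clear_good",
    "clear_bad", or "gray" (hypothetical labels assigned by the team).
    """
    warnings = []
    counts = Counter(labels)
    n = len(labels)
    if not 30 <= n <= 50:
        warnings.append(f"{n} samples; guidance is 30 to 50")
    if counts["gray"] <= n / 2:
        warnings.append("gray-area samples are not the majority")
    if counts["clear_good"] == 0 or counts["clear_bad"] == 0:
        warnings.append("include a few clearly good and clearly bad samples")
    return warnings


# The 30-unit example from the text: 5 clearly defective, 5 clearly acceptable, 20 gray.
plan = ["clear_bad"] * 5 + ["clear_good"] * 5 + ["gray"] * 20
print(review_sample_plan(plan))
```

For the 30-unit example the list of warnings is empty.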

Step 4. Select at least 10 appraisers to conduct the MSA. These should be people who normally conduct the assessment.

Step 5. Perform the appraisal. Provide the samples to each appraiser in random order (without the appraiser knowing which sample is which, and without other appraisers witnessing the appraisal), and have the appraiser classify each item according to the defect definitions.

After the first appraiser has reviewed all items, repeat with the remaining appraisers. Appraisers must inspect and classify independently.

After all appraisers have classified each item, repeat the whole process for one additional trial.
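The randomization in Step 5 can be prepared in advance as a written schedule. The sketch below gives every appraiser an independently shuffled order of all samples for each trial, with appraisers working one after another and the full pass repeated for the second trial. The sample IDs, appraiser names, and fixed seed are hypothetical; the seed only makes the schedule reproducible for auditing.

```python
import random
from typing import Dict, List, Tuple


def presentation_schedule(
    sample_ids: List[str], appraisers: List[str], trials: int = 2, seed: int = 1
) -> Dict[Tuple[int, str], List[str]]:
    """Map (trial, appraiser) to an independently shuffled order of all samples."""
    rng = random.Random(seed)  # fixed seed so the schedule can be reproduced and audited
    schedule = {}
    for trial in range(1, trials + 1):
        for appraiser in appraisers:
            order = sample_ids[:]  # copy so the master list stays untouched
            rng.shuffle(order)
            schedule[(trial, appraiser)] = order
    return schedule


samples = [f"S{i:02d}" for i in range(1, 31)]    # 30 hypothetical sample IDs
appraisers = [f"A{i}" for i in range(1, 11)]      # 10 hypothetical appraisers
schedule = presentation_schedule(samples, appraisers)
print(schedule[(1, "A1")][:5])
```

The IDs in the schedule are for the study coordinator; the labels the appraisers actually see should not reveal which sample is which.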

Step 6. Conduct an expert appraisal or compare to a standard.

In Step 5 the appraisers were compared to themselves (Repeatability) and to one another (Reproducibility).

If the appraisers are not compared to a standard, the team might gain a false sense of security in the Measurement System.


Step 7. Enter the data into a statistical software package such as Minitab and analyze it. Data is usually entered in columns (Appraiser, Sample, Response, and Expert). The analysis output typically includes:

- Percentage overall agreement

- Percentage agreement within each appraiser (Repeatability)

- Percentage agreement between appraisers (Reproducibility)

- Percentage agreement with known standard (Accuracy)
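To see what these percentages count, here is a minimal sketch of the agreement calculations on data laid out in the columns described above. It assumes a Trial column is recorded as well, and it counts a sample as "in agreement" only when every relevant response matches, which is how attribute agreement analyses commonly report it; it is not Minitab's implementation, and the tiny data set is hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, NamedTuple


class Rating(NamedTuple):
    appraiser: str
    sample: str
    trial: int      # assumption: the trial number is recorded explicitly
    response: str
    expert: str     # the expert's or standard's classification of the sample


def agreement_summary(ratings: List[Rating]) -> Dict[str, float]:
    """Percent of samples on which the relevant responses all agree."""
    by_appraiser = defaultdict(lambda: defaultdict(list))  # appraiser -> sample -> responses
    by_sample = defaultdict(list)                           # sample -> every response
    standard = {}
    for r in ratings:
        by_appraiser[r.appraiser][r.sample].append(r.response)
        by_sample[r.sample].append(r.response)
        standard[r.sample] = r.expert

    def pct(hits: int, total: int) -> float:
        return 100.0 * hits / total

    summary = {}
    for appraiser, samples in by_appraiser.items():
        # Repeatability: the appraiser gave the same answer on every trial.
        same = sum(len(set(resp)) == 1 for resp in samples.values())
        summary[f"within {appraiser}"] = pct(same, len(samples))
        # Accuracy per appraiser: every trial matched the standard.
        correct = sum(set(resp) == {standard[s]} for s, resp in samples.items())
        summary[f"{appraiser} vs standard"] = pct(correct, len(samples))
    # Reproducibility: every appraiser gave the same answer on every trial.
    all_same = sum(len(set(resp)) == 1 for resp in by_sample.values())
    summary["between appraisers"] = pct(all_same, len(by_sample))
    # Accuracy overall: every response from every appraiser matched the standard.
    all_correct = sum(set(resp) == {standard[s]} for s, resp in by_sample.items())
    summary["all appraisers vs standard"] = pct(all_correct, len(by_sample))
    return summary


# Deliberately tiny hypothetical study: 2 appraisers, 3 samples, 2 trials.
expert_call = {"S1": "Pass", "S2": "Fail", "S3": "Fail"}
responses = {  # (appraiser, sample) -> responses on trials 1 and 2
    ("A", "S1"): ["Pass", "Pass"], ("A", "S2"): ["Fail", "Fail"], ("A", "S3"): ["Pass", "Fail"],
    ("B", "S1"): ["Pass", "Pass"], ("B", "S2"): ["Fail", "Pass"], ("B", "S3"): ["Fail", "Fail"],
}
ratings = [
    Rating(a, s, t, resp, expert_call[s])
    for (a, s), trial_responses in responses.items()
    for t, resp in enumerate(trial_responses, start=1)
]
for name, value in agreement_summary(ratings).items():
    print(f"{name}: {value:.1f}%")
```

Each appraiser is self-consistent on two of the three samples, but only S1 gets the same answer from everyone, which is why between-appraiser agreement is usually lower than within-appraiser agreement.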

Interpreting The Output

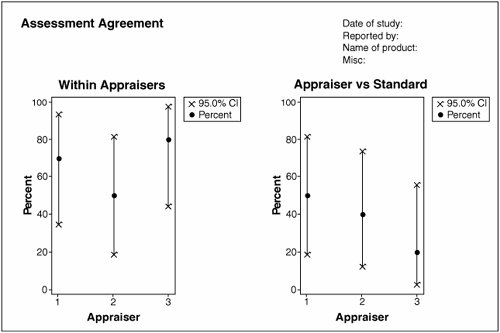

The figure below shows an example of graphical output from an MSA attribute data study. The left side of the graph shows the agreement within each appraiser (analogous to Repeatability). The right side shows the agreement between each appraiser and the standard. The dots represent the actual agreement from the study data. The crosses mark the bounds of the 95% confidence interval for each agreement percentage.

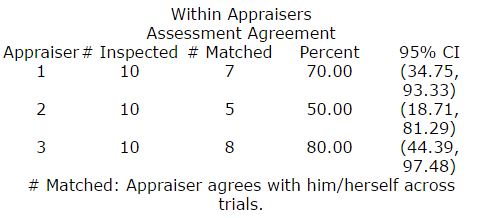

The associated within-appraiser statistics are shown below. For example, Appraiser 1 agreed with himself on seven of the ten samples across the two trials. Going forward, his agreement would likely fall somewhere between 34.75% and 93.33% (with 95% confidence). A narrower confidence interval would require more samples or trials. For a good, reliable measurement system, agreement needs to be 90% or better.
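The interval quoted for Appraiser 1 is consistent with an exact (Clopper-Pearson) binomial confidence interval for 7 successes in 10 samples; the source does not name the method, so treat that as an assumption. The sketch below computes that interval with the standard library only, by bisection on the binomial cumulative distribution, and also shows how the interval tightens when the same 70% agreement is observed on 50 samples.

```python
from math import comb


def binom_cdf(k: int, n: int, p: float) -> float:
    """P(X <= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k + 1))


def exact_interval(successes: int, n: int, confidence: float = 0.95):
    """Clopper-Pearson (exact binomial) confidence interval for a proportion."""
    alpha = 1.0 - confidence

    def solve(f, target):
        # f is decreasing in p on [0, 1]; bisect for the p where f(p) equals target.
        lo, hi = 0.0, 1.0
        for _ in range(100):
            mid = (lo + hi) / 2
            if f(mid) > target:
                lo = mid
            else:
                hi = mid
        return (lo + hi) / 2

    # Lower bound: P(X >= successes) = alpha/2, i.e. P(X <= successes - 1) = 1 - alpha/2.
    lower = 0.0 if successes == 0 else solve(lambda p: binom_cdf(successes - 1, n, p), 1 - alpha / 2)
    # Upper bound: P(X <= successes) = alpha/2.
    upper = 1.0 if successes == n else solve(lambda p: binom_cdf(successes, n, p), alpha / 2)
    return lower, upper


for label, hits, n in [("Appraiser 1, within", 7, 10),
                       ("All appraisers, between", 4, 10),
                       ("Same rate, 50 samples", 35, 50)]:
    lo, hi = exact_interval(hits, n)
    print(f"{label}: {hits}/{n} = {hits / n:.0%}, 95% CI {lo:.2%} to {hi:.2%}")
```

The 4-of-10 case corresponds to the between-appraiser result discussed next. Against the 90% target, note that even the upper bound for 4 of 10 stays below 90%, while for 7 of 10 the interval is too wide to conclude much either way.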

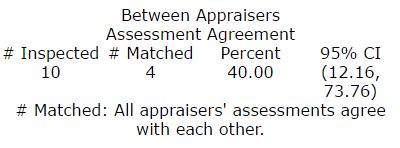

The associated between-appraiser statistics for the MSA attribute data study are shown below. The appraisers all agreed with one another on four of the ten samples. Going forward, agreement would likely fall somewhere between 12.16% and 73.76% (with 95% confidence). For a reliable measurement system, agreement needs to be 90% or better, which is clearly not the case here.


Reasons MSA Attribute Data Fails

After the MSA attribute data has been analyzed, the results usually show poor reliability. This is mainly due to the sheer number of ways these types of measurement systems can fail:

Appraiser

- Visual acuity (or lack of it)

- Misinterpretation of the reject definitions

- Individual method of inspection adopted

Appraisal

- Defect probability. If the defect rate is very high, the appraiser tends to reduce the stringency of the test, becoming numbed or hypnotized by the sheer monotony of repetition. If it is very low, the appraiser tends to become complacent and see only what he or she expects to see. This happens in spite of good visual acuity.

- Fault type. Some defects are far more obvious than others.

- Number of faults occurring simultaneously. When several faults occur on the same item, the appraiser must judge the correct defect category.

- Not enough time allowed for inspection.

- Infrequent appraiser rest periods.

- Poor illumination of the work area.

- Poor inspection station layout. Quite often there isn't enough space to conduct the test effectively. Sometimes dedicated space is not provided at all.

- Time of day and how long the appraiser has been working.

- Poor objectivity and clarity of conformance standards and test instructions.

Organization and environment

- Appraiser training and certification.

- Peer standards. Defectives are often deemed to reflect badly on coworkers, so the appraiser is constantly under pressure to artificially reduce the defect rates.

- Management standards. Appraisers often report to someone who is accountable for the volume of shipped product. Reduced volumes reflect poorly on this individual, so they sometimes unconsciously apply pressure on the appraiser to ensure volumes remain high and defects slip by.

- Knowledge of operator or group producing the item.

- Proximity of inspectors.

- Re-inspection versus immediate shipping procedures.

Given all the preceding possibilities for failure, it should be apparent why we strongly encourage you to move to continuous metrics rather than attribute ones. If only attribute measures are feasible, some actions can help improve the reliability of the metric, but there are no guarantees in this area:


Suggestions To Improve MSA Attribute Data

1) Set very clear operational definitions of the metric and defect classifications.

2) Train and certify appraisers, and revisit this on a regular basis.

3) Break up the numbing rhythm with pauses.

- Introduce greater variety into the job by giving a wider assortment of duties or greater responsibility.

- Arrange for frequent job rotation.

- Introduce regular rest periods.

4) Enhance faults to make them more detectable.

- Use sense multipliers, such as optical magnifiers, visual inspection equipment, sound amplifiers, and other devices, to expand the ability of the unaided human to sense the defects or categories.

- Use masks to block out the inspector's view of irrelevant characteristics, allowing focus on key responsibilities.

- Use templates. For example, place a cardboard template with alignment holes over the object. Holes in the template mate with the object's projecting terminals and also serve as a gage for size. Any extra or misplaced terminal prevents the template from seating properly, and a missing item becomes evident because the associated hole is empty.

- Use overlays: transparent visual aids with tolerance guidelines. The guidelines simplify judging the size or location of product elements.

- Use checklists, such as the pre-flight checklists used on aircraft.

- Consider product redesign. In some situations, the product design makes access difficult or places needless complexity or inspection burden on the inspectors. In such cases, product redesign helps reduce inspector errors as well as operator errors.

- Use error proofing. There are many forms of this, such as redundancy, countdowns, and fail-safe methods.

- Automate repetitive inspection. Automation makes no inadvertent errors once the setup is correct and stable. Clearly, there are limitations due to cost and the current state of technology.

- Use visual aids. Keep an appraiser from relying on memory of the standard. Provide the appraiser with a physical standard or photographs of the defects. For example, the automobile industry uses painted plates exhibiting several scratches of different measured width to help define defects in the paint finish.

MSA attribute data systems are certainly the most difficult to improve. Continually check for appraiser understanding. Capture data on a routine basis on the proportion of items erroneously accepted or rejected. Apply Statistical Process Control to the Measurement System based on this data.
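One standard way to apply Statistical Process Control to this kind of routine data is a p-chart of the proportion of audited items that were erroneously accepted or rejected. The source does not specify a chart type, so this is a minimal sketch assuming a p-chart with conventional 3-sigma limits; the weekly audit counts are hypothetical.

```python
from math import sqrt
from typing import List, Tuple


def p_chart(errors: List[int], checked: List[int]) -> List[Tuple[float, float, float, bool]]:
    """p-chart for the proportion of items misclassified in each period.

    errors[i]: items erroneously accepted or rejected in period i
    checked[i]: items re-checked against the standard in period i
    Returns (proportion, lower limit, upper limit, out_of_control) per period.
    """
    p_bar = sum(errors) / sum(checked)  # centre line: pooled misclassification rate
    rows = []
    for e, n in zip(errors, checked):
        sigma = sqrt(p_bar * (1 - p_bar) / n)
        lcl = max(0.0, p_bar - 3 * sigma)  # a proportion cannot go below 0
        ucl = min(1.0, p_bar + 3 * sigma)  # or above 1
        p = e / n
        rows.append((p, lcl, ucl, p < lcl or p > ucl))
    return rows


# Hypothetical weekly audits: 50 items re-checked against the standard each week.
checked = [50] * 6
errors = [3, 2, 4, 3, 12, 2]
for week, (p, lcl, ucl, flag) in enumerate(p_chart(errors, checked), start=1):
    print(f"week {week}: {p:.1%} (limits {lcl:.1%} to {ucl:.1%}){'  <- investigate' if flag else ''}")
```

Week 5 falls above the upper limit, signalling that something in the appraisal changed that week and should be investigated.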


Quality Assurance Solutions, Robert Broughton, (805) 419-3344, USA
